A collaborative effort has released two extensive scientific datasets that could strengthen AI systems’ ability to reason across disciplines, from celestial events to biological processes. The release marks a significant step toward machines that can make unexpected connections between seemingly unrelated fields.


Imagine if artificial intelligence could think like a Renaissance scholar, drawing insights from astronomy, biology, physics, and beyond. The Polymathic AI project has taken a significant step in this direction by releasing 115 terabytes of diverse scientific data, more than double the training data behind GPT-3, curated to help AI systems develop a multidisciplinary understanding of science.

“These pioneering datasets are the most diverse and extensive collections of high-quality data ever compiled for machine learning training in these fields,” says Michael McCabe, a research engineer at New York City’s Flatiron Institute. “Curating these datasets is crucial for creating AI models that can span multiple disciplines and lead to new discoveries about our universe.”

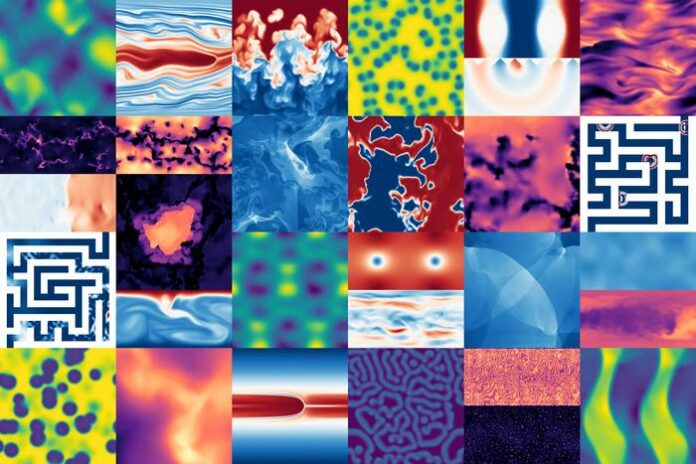

The project takes its name from polymaths, people with deep expertise in many fields. Rather than relying on individual geniuses to bridge disciplines, it aims to build that cross-disciplinary thinking into AI systems. The datasets range from galaxy images captured by the James Webb Space Telescope to simulations of biological and fluid systems.

“While machine learning has been used in astrophysics for about a decade, it remains challenging to apply it across different instruments, missions, and scientific fields,” explains Polymathic AI research scientist Francois Lanusse. “Datasets like the Multimodal Universe will help us build models that inherently understand various types of data and can serve as a versatile tool for astrophysics.”

The data is divided into two main collections. The Multimodal Universe contains 100 terabytes of astronomical data. The Well contains 15 terabytes of numerical simulations of complex processes, such as supernova explosions and embryo development. These simulations are built on partial differential equations, mathematical descriptions that recur across diverse scientific fields.

“These openly available datasets are an invaluable resource for developing advanced machine learning models that can address a wide range of scientific challenges,” says Ruben Ohana, a research fellow at the Flatiron Institute’s Center for Computational Mathematics. “The open-source nature of the machine learning community has fostered rapid progress compared to other fields.”

Glossary

- Polymathic AI: Artificial intelligence systems designed to work across multiple scientific disciplines, much like human polymaths who have expertise in many fields

- Machine Learning: A type of artificial intelligence in which systems improve automatically by learning from data and experience

- Partial Differential Equations: Mathematical equations that describe many physical phenomena and appear repeatedly across different scientific fields

Test Your Knowledge

How large are the new datasets compared to GPT-3’s training data?

The new datasets total 115 terabytes, which is more than twice the size of GPT-3’s 45 terabytes of training data.

What are the two main collections in the released datasets?

The Multimodal Universe (100 terabytes of astronomical data) and the Well (15 terabytes of numerical simulations).

How do partial differential equations connect seemingly different scientific phenomena?

These equations describe processes ranging from quantum mechanics to embryo development, providing a shared mathematical language that bridges different scientific fields.

What fundamental shift in AI development does this project represent compared to traditional scientific AI tools?

While traditional AI tools are purpose-built for specific applications, this project aims to develop truly polymathic models that can work across disciplines and find unexpected connections between fields.
